The New Battlefield: From Search Rankings to AI Training

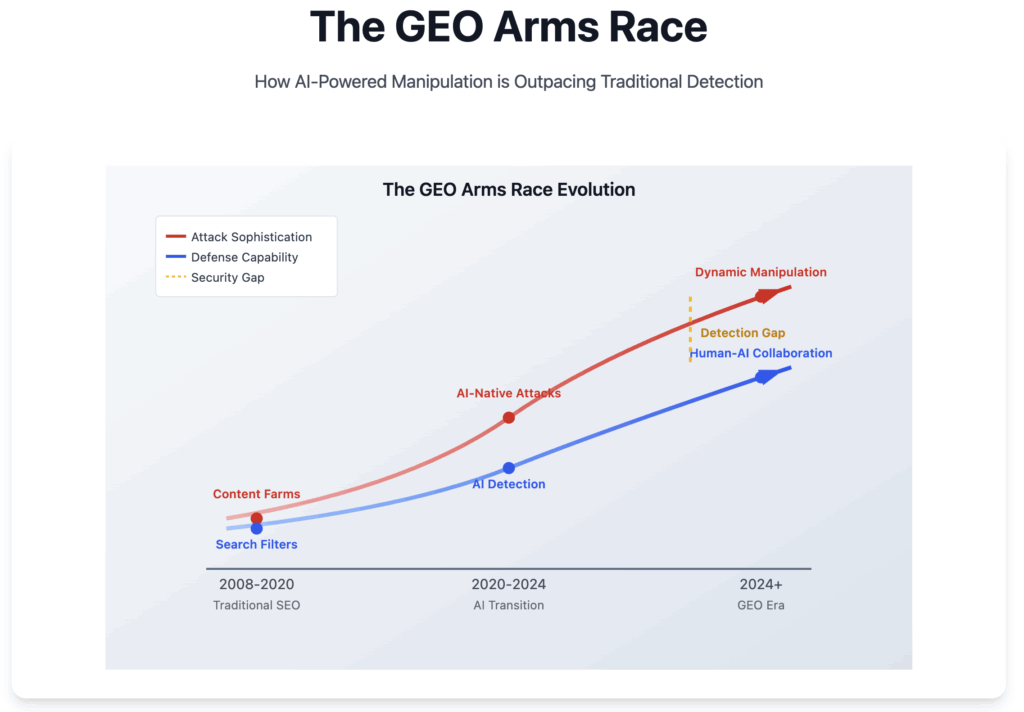

For fifteen years, we’ve watched an escalating arms race between search engines and those trying to game them. PageRank manipulation, link farms, keyword stuffing—search engines eventually caught up to each tactic. But now we’re facing something fundamentally different: Generative Engine Optimization (GEO) isn’t just about fooling algorithms that rank pages. It’s about poisoning the very knowledge that AI systems learn from.

The stakes are higher than they ever were for search, and detection methods built to catch low-quality spam miss manipulation that is deliberately high quality.

The Evolution of AI-Targeted Manipulation

Generation 1: Content Farms Go AI-Native

Traditional content farms were obvious—thin content, keyword stuffing, poor grammar. Today’s AI-powered content farms are sophisticated operations that produce seemingly authoritative articles at unprecedented scale. These aren’t the gibberish farms of 2010. They’re creating content that passes traditional quality filters while subtly embedding manipulation signals designed specifically for LLM consumption.

We’re seeing networks that can generate thousands of topically relevant articles daily, each one crafted to be picked up for LLM training datasets while carrying embedded biases or factual distortions. The content quality is often indistinguishable from legitimate sources, because the same AI tools creating the manipulation are being used by legitimate publishers.

Generation 2: Prompt Injection Through “Helpful” Sources

Perhaps the most insidious development is the emergence of sources that appear helpful and authoritative but contain hidden instructions designed to influence AI behavior. These aren’t obvious spam signals—they’re carefully crafted to exploit how LLMs process and prioritize information.

Consider a seemingly legitimate medical information site that provides accurate health advice but subtly frames certain treatments as more effective than clinical evidence supports. Or technical documentation that appears authoritative but contains biased recommendations that favor specific vendors or approaches. Traditional spam detection misses these entirely because the content quality is high and the manipulation is sophisticated.

Generation 3: Dynamic Manipulation at Scale

The newest frontier involves content that adapts in real time based on how it’s being accessed. We’re tracking sources that present different content to AI crawlers versus human visitors, or that modify their messaging depending on whether a request looks like a training-data crawl or a retrieval fetch made at inference time.

This isn’t just cloaking—it’s intelligent manipulation that learns and adapts. Some sources are even experimenting with content that changes based on what other sites the crawler has recently visited, attempting to create contextual influence that compounds across multiple training sources.
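The simplest form of this, serving one page to people and another to crawlers, can at least be checked for. Here is a minimal sketch, assuming you have already fetched the same URL twice (once with a browser-like client, once identifying as an AI crawler) and extracted the visible text. The function names and the 0.8 threshold are our own illustrative choices, not a standard, and the check will not catch the adaptive behavior described above.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word windows) in the text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 1.0  # two empty pages count as identical
    return len(a & b) / len(a | b)


def looks_cloaked(human_view: str, crawler_view: str, threshold: float = 0.8) -> bool:
    """Flag a page whose crawler-facing text diverges from its human-facing text.

    The threshold is an assumption; tune it on pages known to be honest,
    since ads and timestamps cause some benign drift between fetches.
    """
    return jaccard(shingles(human_view), shingles(crawler_view)) < threshold


human = "Drug A showed modest benefit in one small trial"
crawler = "Drug A is the most effective treatment available today"
print(looks_cloaked(human, crawler))  # the two views share no 3-word shingle
```

A flag here is a reason to look closer, not proof of manipulation: personalization and A/B tests also produce differing views.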

Protecting Your Training Data Investment

While automated systems can process vast amounts of content, they struggle with the nuanced judgment calls that detecting sophisticated manipulation requires. Human verification provides a critical defense layer, not for every source, but for the edge cases where millions of dollars in training investments hang in the balance.

The key is building systems that combine automated detection with human expertise, and that reserve human attention for the cases automation cannot settle. Once automated systems flag potentially problematic sources, human reviewers can make the contextual judgments that determine whether subtle bias patterns represent legitimate perspective or systematic manipulation.
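One way to picture that division of labor is a triage step with two thresholds. This is a sketch under assumptions: `risk_score` stands in for the output of whatever automated detector you run, and the cutoffs of 0.2 and 0.9 are placeholders, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class Source:
    url: str
    # Hypothetical detector output: 0.0 (looks clean) to 1.0 (looks manipulated).
    risk_score: float


def route(source: Source, accept_below: float = 0.2, reject_above: float = 0.9) -> str:
    """Send clear cases to automation and ambiguous ones to a human reviewer."""
    if source.risk_score < accept_below:
        return "accept"
    if source.risk_score > reject_above:
        return "reject"
    return "human_review"  # the edge cases where judgment matters


queue = [
    Source("https://example.org/a", 0.05),
    Source("https://example.org/b", 0.55),
    Source("https://example.org/c", 0.97),
]
for source in queue:
    print(source.url, route(source))
```

Widening the gap between the two thresholds sends more sources to reviewers; narrowing it trusts the detector more. Where to set them depends on how costly a missed manipulation is compared with reviewer time.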

The Real Danger: Systematic Bias Injection

The goal of modern GEO isn’t to create obvious spam—it’s to subtly shift the knowledge base that AI systems learn from. Imagine thousands of sources that are 95% accurate but consistently present certain political viewpoints, commercial interests, or factual frameworks as more authoritative than they actually are.

The cumulative effect isn’t a few bad sources in training data—it’s a systematic skewing of how AI systems understand truth, authority, and reliability. This kind of manipulation can influence AI behavior in ways that are nearly impossible to detect after the fact.
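Because each individual article can look fine, this kind of skew only shows up in aggregate. The sketch below assumes an upstream step (not shown, and hypothetical) has already labeled each document with the option it favors on some question, such as which vendor it recommends. It then flags sources whose documents almost always favor the same option. The `min_docs` and `max_share` values are illustrative.

```python
from collections import Counter, defaultdict


def flag_skewed_sources(records, min_docs: int = 20, max_share: float = 0.9) -> dict:
    """Return {source: (favored_option, share)} for one-sided sources.

    records: iterable of (source, favored_option) pairs, one per document.
    The labels are assumed to come from a separate stance classifier.
    """
    per_source = defaultdict(Counter)
    for source, option in records:
        per_source[source][option] += 1

    flagged = {}
    for source, counts in per_source.items():
        total = sum(counts.values())
        option, top = counts.most_common(1)[0]
        # Require enough documents that the share is not just noise.
        if total >= min_docs and top / total >= max_share:
            flagged[source] = (option, top / total)
    return flagged


records = (
    [("a.example", "VendorX")] * 19 + [("a.example", "VendorY")]
    + [("b.example", "VendorX")] * 10 + [("b.example", "VendorY")] * 10
)
flagged = flag_skewed_sources(records)
print(flagged)

# Many unrelated sources skewing toward the same option is the stronger signal.
print(Counter(option for option, _ in flagged.values()))
```

A one-sided source is not necessarily malicious (a vendor's own documentation will favor that vendor), so the output is a list of candidates for review rather than a verdict.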

What’s Coming Next

Our analysis suggests we’re moving toward even more sophisticated manipulation:

- Adversarial content optimization: Sources that test their content against detection systems and iterate until it passes

- Cross-platform coordination: Manipulation campaigns that coordinate across multiple platforms to create false consensus

- Real-time adaptation: Content that modifies itself based on detection attempts

The Infrastructure We Need

Fighting this arms race requires detection systems built specifically for the AI era:

- Pattern recognition at scale: Systems that can identify subtle bias patterns across millions of sources

- Behavioral analysis: Detection that focuses on systematic manipulation rather than content quality

- Human-AI collaboration: Combining automated detection with human expertise for nuanced judgment calls

- Real-time adaptation: Detection systems that evolve as quickly as the manipulation tactics

The future of AI trustworthiness depends on our ability to stay ahead of this arms race. Those building AI systems today need to assume their training data is under active attack—and build accordingly.
